Can Googlebot crawl speed be controlled to avoid server overload?


I seem to be having a problem with server overload. Is there some way to control the rate at which Google crawls our site?

Answer:

The short answer is yes, but bear in mind that if Googlebot is overloading your server with crawl requests, the underlying problem may be that your website is running on budget hosting or that the hosting environment is misconfigured. These are problems you should look into and fix as soon as possible.

Beyond that, since Googlebot does not honor the robots.txt crawl-delay directive, you'll need to use the crawl rate tool in Google Search Console (GSC), which lets you slow down the speed at which Googlebot crawls your site.
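For context, here is roughly what the crawl-delay directive looks like and how a crawler that does honor it would read the value. This is only a sketch using Python's standard `urllib.robotparser`; the robots.txt contents, the 10-second value, the bot name, and the `parse_crawl_delay` helper are all made up for illustration. Googlebot skips this line entirely, which is why the GSC setting is needed.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the 10-second delay is an illustrative value.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Disallow: /private/
"""


def parse_crawl_delay(robots_txt, user_agent):
    """Return the Crawl-delay (in seconds) that applies to user_agent, or None."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent)


# A crawler that respects the directive would wait this many seconds between
# requests. "SomeOtherBot" is a placeholder name, not a real crawler.
print(parse_crawl_delay(ROBOTS_TXT, "SomeOtherBot"))
```

Leaving a crawl-delay line in robots.txt is harmless and may still slow down other crawlers that support it, but it won't change how Googlebot behaves.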

You'll find the tool at: https://www.google.com/webmasters/tools/settings

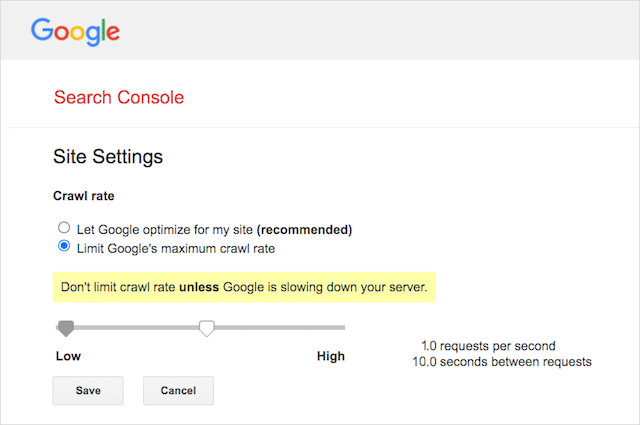

After choosing the site you want to define the crawl rate for, you'll see that the default crawl rate is set to "Let Google optimize for my site (recommended)". Click "Limit Google’s maximum crawl rate" and then set the slider to the crawl rate you prefer.

It'll take Google a day or so to adjust to your change.
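If you aren't sure how hard Googlebot is actually hitting your server, one rough way to find out is to count its requests per minute in your access log before and after changing the setting. The sketch below assumes the common/combined log format and simply matches "Googlebot" in the user agent string; the function name and the sample log lines are invented for illustration, so adjust the pattern to whatever your server writes.

```python
import re
from collections import Counter

# Assumes timestamps like [10/Oct/2023:13:55:36 +0000], as in the common and
# combined access log formats. The capture group keeps everything up to the minute.
TIMESTAMP = re.compile(r"\[(\d{2}/\w{3}/\d{4}:\d{2}:\d{2}):\d{2} [+-]\d{4}\]")


def googlebot_requests_per_minute(lines):
    """Count log lines mentioning Googlebot, grouped by minute."""
    counts = Counter()
    for line in lines:
        if "Googlebot" not in line:
            continue
        match = TIMESTAMP.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Made-up sample lines standing in for a real access log.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:13:55:50 +0000] "GET /a HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:13:56:01 +0000] "GET /b HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2023:13:55:40 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
]

# Show the busiest minutes first.
for minute, hits in googlebot_requests_per_minute(SAMPLE_LOG).most_common():
    print(minute, hits)
```

To run it against a real log, pass an open file object in place of `SAMPLE_LOG`. Keep in mind that anything can claim to be Googlebot in its user agent string, so treat the numbers as an estimate.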

Google's support documentation covers everything you need to know about limiting or adjusting Googlebot's crawl rate, as well as how to ask Google to recrawl your URLs.